Let’s be blunt: the new Instagram Teen Account protections are necessary, but they’re also a band-aid on a deeper wound. Without strict age verification, teens (and even younger children) can simply lie about their birthday and slip through the cracks. Unless Meta, TikTok, and other social platforms enforce checks that can’t be bypassed and close loopholes comprehensively, these changes mostly make it a little harder, not impossible, for kids to see what they want to see.

That said, Meta’s effort to use AI to predict users’ ages (and move teens into teen accounts) is a welcome development. So what’s the latest change? And what does it really mean for families?

What Instagram Is Announcing (The Official Meta / Instagram Statement)

Meta recently published a blog post, “New PG-13 Guidelines for Instagram Teen Accounts”, laying out its updated policy for under-18 users. In short:

- All teen accounts (under 18) will default to a 13+ content setting, aligned with PG-13 movie standards.

- Teens won’t be able to opt out of this setting without a parent’s permission.

- Accounts that repeatedly post “18+ content” (sex, strong language, drug references, nudity, etc.) will be blocked from appearing in teen feeds.

- Meta will use age prediction technology to detect and place users into these protections—even if they falsely claim to be older.

- New parental controls let parents choose an even stricter “Limited Content” setting if they prefer.

- Archived content, search terms, and recommendations will be filtered more aggressively for teen accounts.

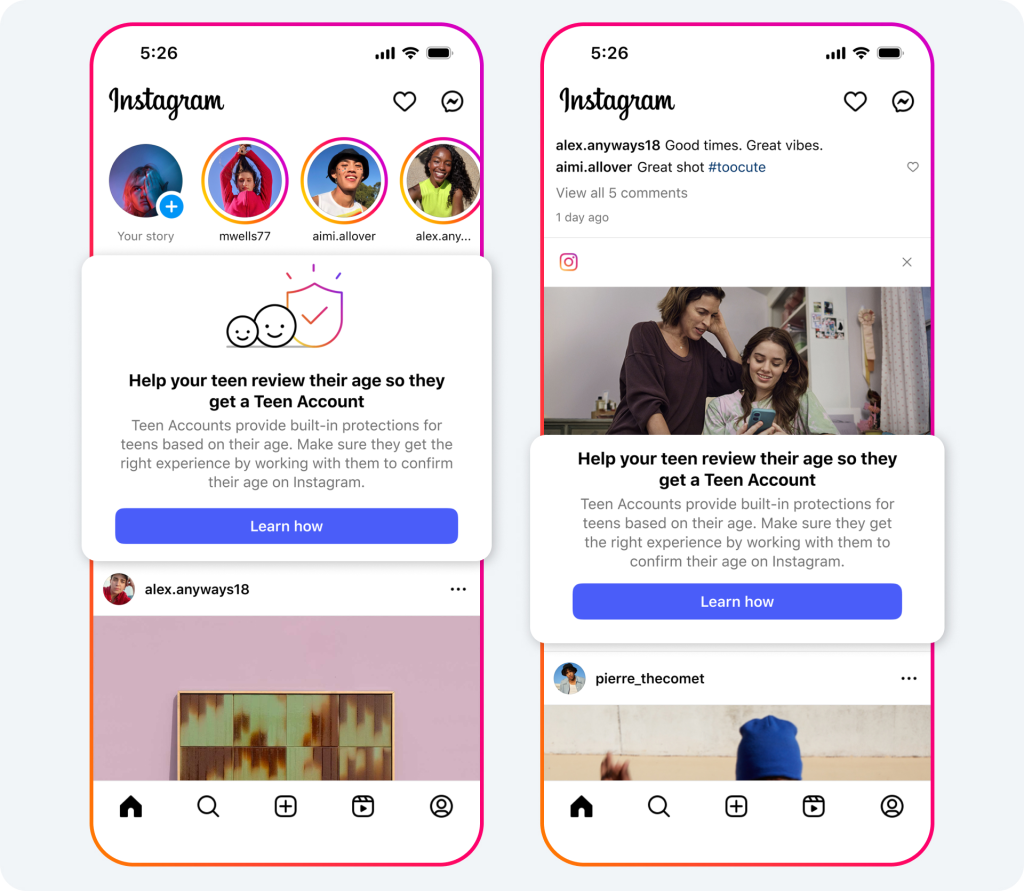

These changes build on Meta’s ongoing Instagram Teen Accounts protections and represent the biggest update since Instagram Teen Accounts first launched.

What Exactly Changes on Instagram Teens — And What Doesn’t

✅ What’s Getting Tighter

- Access to mature content will be reduced, especially from accounts posting explicit or borderline content.

- Teens won’t be able to follow or see content from accounts with flagged names or bios containing links to adult or inappropriate sites.

- Searches from teen accounts for terms like “gore” and “alcohol” will be blocked or filtered.

- Posts from “adult” accounts that teens already follow will be hidden if they violate the new guidelines.

- Instagram’s built-in AI chatbots will now be restricted to PG-13 conversations when interacting with teen accounts.

⚠️ What Still Stays Vulnerable

- False age declarations: If a kid claims they are 18+, age filters might not apply.

- Workarounds: Multiple IG accounts, alternate usernames, VPNs, and apps outside Instagram still exist. If you think this is too “complicated” for teens to figure out… think again. They are a lot more tech-savvy than you may think. Just because YOU don’t know how doesn’t mean they haven’t figured it out and helped one another do it.

- Algorithm decisions: Filtering relies on content detection, so subtly explicit content or suggestive posts may slip through.

- No identity checks: Instagram is not (yet) requiring verifiable ID, facial recognition, or parental verification that can’t be bypassed. This has been a major sticking point, because gating sign-ups can cause visible drops in overall sign-ups and thus hurt the perception of business health.

- Effectiveness concerns: Researchers have questioned how well Meta’s safety features really perform.

In fact, a recent study of Instagram found that of 47 safety features Meta claimed would protect young users, only eight were consistently effective under real-world testing. That’s a red flag.

Why These Instagram Teen Updates Matter — But Why They Aren’t Enough

The Good:

- Public pressure worked: Meta had to respond to mounting scrutiny about teen mental health and platform harm.

- Guidelines parents understand: PG-13 is a familiar standard.

- Parental control extension: Families who want stricter settings will have more power.

- AI & predictive tech: The move to detect underage users (beyond self-declaration) is a step toward reducing abuse from misrepresented accounts.

The Limits (The “Band-Aid” Effect)

- Kids know how to lie: A birthday change takes seconds.

- Loophole exploitation: The internet has too many corners.

- Emotional & behavioral influence remains: Even sanitized content can normalize materialism, body image pressure, or self-worth tied to likes.

- Tech companies haven’t fully owned the problem: Without strong enforcement mechanisms, these rules may be more PR than real safety.

Until platforms require verified identity or robust checks (for example, linking to government-issued ID, or requiring periodic verification), we’ll be dancing around the edges of safety.

The Bigger Problem: The Nature of Social Media Itself

Even with PG-13 filters, the real issue isn’t just explicit content — it’s the entire value system that platforms like Instagram and TikTok are built upon. Constant comparison. Endless scrolling. Validation by likes. Exposure to distorted beauty, status, and identity.

You can remove the R-rated material, but the emotional and psychological damage still creeps in through the everyday feed. As we covered in our article on How TikTok & Instagram Are Rewiring Our Kids’ Brains, these platforms are engineered for dopamine addiction, shaping how young users think, feel, and even define their self-worth.

The true fix isn’t just filtering what they see — it’s limiting how much they see altogether.

What Parents & Concerned Adults Should Do

- Advocate publicly for stricter regulation, verified identity, and accountability from tech firms.

- Use protective tools like Covenant Eyes, which provides filtering, accountability, and reporting.

- Delay, limit, or stop smartphone and social media access for younger kids rather than rushing to “give in,” something we explore more deeply in our book review of The Tech Exit.

- Consider providing a locked-down, parent-controlled smartphone such as the Gabb phone.

- Have honest conversations about online temptation, peer pressure, and the difference between “okay” content and content that harms.

- Encourage other sources of identity and worth: books, sports, service, and offline relationships.

- Monitor how your child uses social media, not just how much.